The Hacker Mind Podcast: Hacking the Chrome Sandbox

In 1994, the first commercial internet browser was released. Netscape Navigator went on to be eclipsed by Internet Explorer, Safari, Firefox, and now Chrome, but it helped kick-start the internet-focused world we live in today. Along the way, we’ve also learned a lot about browser security.

No matter how strongly we build our browsers, that does not stop hackers from trying to break them in new ways. In this episode, one researcher explains how he successfully escaped the Chrome sandbox, and how bug bounties might just be a good thing, resulting in better security for us all.

Listen to EP 07: Hacking the Chrome Sandbox

Vamosi: On October 13, 1994, the first commercial web browser launched, and, yeah, I was one of the first people in line at Fry’s in Palo Alto to get my copy of Netscape Navigator. It required that I install PPP which stands for Point to Point Protocol, which was missing in native Windows 3.1. But once I added that, I could then surf the web -- all 500 websites of it.

One year after Netscape Navigator launched, Microsoft released, as part of Windows 95, a browser called Internet Explorer, launching the infamous browser wars. In the end, Netscape would be sold to AOL and … well, disappear. But the talent that created Netscape became Mozilla, which launched Firefox. Along the way came Apple Safari and then Google Chrome. The point here is that browsers are an essential part of our lives, and they are so much stronger and better today. You’re probably using a browser to download or listen to this podcast. You might even have different tabs open. What keeps the browser from crashing is that certain operations are constrained, they’re processed separately, and they’re limited by what’s known as sandboxes. But what happens when a developer makes a mistake? What happens when something malicious escapes the sandbox?

Welcome to The Hacker Mind, an original podcast from ForAllSecure. It's about challenging our expectations about the people who hack for a living.

I’m Robert Vamosi and in this episode I’m discussing how a professional hacker just happened to find a vulnerability in Google’s Chromium, and why that’s not necessarily a bad thing that he did that, and why, perhaps, hackers like this should be compensated fairly.

In 2012, Google’s Chrome fell for the first time at the annual Pwn2Own competition in Vancouver’s CanSecWest.

Narrator: Google offered to pay as much as $1 million to anyone who could show vulnerabilities in its Chrome browser. And this week a French team got $60K for doing just that. Compared to peers like Safari and Internet Explorer, Chrome has been a seemingly impenetrable web surfing fortress at Pwn2Own.

Vamosi: It was a humbling experience, and it drove further enhancements in security at Google. Chrome is still one of the most secure browsers today. But that doesn’t keep hackers from finding new and interesting vulnerabilities, and presenting them at other conferences, such as the one at OPCDE earlier this year.

Tim: That talk was about a bug that I found in Google Chrome, which I used to write an exploit to escape from the sandbox.

Vamosi: This is Tim. He’s a professional pen tester, a professional hacker. He works for a security company called Theori.

Tim: Theori is a security consulting and contracting company. Primarily, we do security audits and analysis for software vendors. And in between contracts, we like to do vulnerability research on open source software or major high-impact software targets.

The most notable example I can think of would be like Windows, or maybe iOS, where large parts of the source code are not open source, but they impact billions of users. And they have vulnerabilities in them which you could argue are a little harder to find because the code is not open source, but that's still achievable through reverse engineering or fuzzing or other techniques.

Vamosi: There are two terms you need to know up front. Chrome is a browser you run on your laptop or mobile. Chromium is the free and open-source project that runs underneath Chrome and other browsers.

Tim: Chrome is based on the open source project Chromium by Google. And these days actually a lot of things are based on Chromium so other browsers, most notably Microsoft Edge is now Chromium based, but there's also a few others like Brave browser, and a few other smaller projects that are all based on the same code.

Vamosi: So think of Chromium as a stripped-down open source version of a browser, and then Chrome and Edge put extra features on top of that. Because it’s open source, developers can change or add code. And apparently that’s what happened here.

Tim: The bug was actually implemented by Microsoft developers when they were adding a feature for Microsoft Edge, and the feature then got ported back into the open source Chromium implementation. So it affected everything that derived from Chromium, including Google Chrome and Edge, and the other browsers.

Vamosi: So is the problem usually in the implementation or with the open source project itself?

Tim: I would say it's almost always in the open source code. There's a very small amount of code which is closed source and private to the different vendors. And though I haven't looked in great detail into the Edge specific code, partly because it's not open source. I think it's closed source. And you can only analyze it by reverse engineering the compiled code, but also there just doesn't seem to be a whole lot there that isn't just based on Chromium open source.

Vamosi: What’s true across all modern browsers is the need to break processes into smaller, more independent units. Sandboxes. These provide stability. So if something crashes within a sandbox, it won’t affect the larger system. Something similar happens with browser tabs -- if something crashes within a tab, it doesn’t necessarily crash the entire browser.

Tim: The sandbox in Chrome is a security boundary or mechanism that exists to prevent even a remote code execution exploit -- so, access that gets code running on the target computer -- from accessing the interesting parts of the system. The sandbox creates limits on what a certain process running on a computer can access, like which parts of the system it can talk to. The goal of the Chrome sandbox is to limit the damage that a remote attacker could do, even if they get code execution on the target computer. There's a very specific attack surface that you have from within the sandbox, where there's only a few things you can interact with. In order to escape the sandbox, you typically have to use this very limited attack surface to find a bug in one endpoint of it and get code execution outside of the sandbox. So that's what you'd have to do with that bug.

Vamosi: So a web browser today actually consists of different sandboxes. That makes sense. As you surf the net, parts of a website are rendered in separate spaces -- in some cases the page won’t load or maybe just the tab crashes as a result of malicious code running. In order for all this to work, in order to render a full web page correctly, those separate sandboxes all have to communicate with each other. And so it’s there, in the communications framework that stitches these processes together, that Tim found his vulnerability, where an object could escape its sandbox.

Tim: Mojo is an IPC platform; IPC means inter-process communication. So, basically, in Chrome there's the sandboxed process, which is running most of the web engine rendering stuff, and then there's the browser process, which is kind of the root, main process in Chrome that coordinates all of these other processes. They have to talk to each other using IPC, and Mojo is the platform that was built for Chromium for doing IPC, and it's now used in some other Google projects as well. And so the bug that I found was very closely tied to how Mojo is used in Chrome.

It was a specific bug class, where a certain object that exists outside of the sandbox gets used after it's freed, and the mechanism by which this happens involves the Mojo IPC platform, basically.
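To make the bug class concrete, here is a minimal sketch of the lifetime problem Tim describes. It is not Chromium or Mojo code: `Handler`, `Task`, `MakeUnsafeTask`, `MakeGuardedTask`, and `RunGuarded` are hypothetical names, and standard-library smart pointers stand in for whatever the real code uses. A pending reply that captures a raw pointer can outlive the object it points to; a guarded version checks whether the object is still alive before touching it.

```cpp
#include <functional>
#include <memory>
#include <string>

// Hypothetical stand-in for a privileged object living outside the sandbox.
struct Handler {
    std::string name = "handler";
    std::string Describe() const { return "reply from " + name; }
};

// Hypothetical stand-in for a pending IPC reply that runs at some later time.
using Task = std::function<std::string()>;

// UNSAFE pattern, shown only for illustration and never called here:
// the task keeps a raw pointer, so running it after the Handler has been
// destroyed would be a use-after-free.
Task MakeUnsafeTask(Handler* handler) {
    return [handler] { return handler->Describe(); };
}

// Guarded pattern: the task holds a weak reference and checks that the
// Handler is still alive before using it.
Task MakeGuardedTask(const std::shared_ptr<Handler>& handler) {
    std::weak_ptr<Handler> weak = handler;
    return [weak] {
        if (auto alive = weak.lock()) {
            return alive->Describe();
        }
        return std::string("handler gone, reply dropped");
    };
}

// Creates a Handler, queues a guarded task, optionally destroys the Handler
// before the task runs, then runs the task and returns its result.
std::string RunGuarded(bool destroy_first) {
    auto handler = std::make_shared<Handler>();
    Task task = MakeGuardedTask(handler);
    if (destroy_first) {
        handler.reset();  // the object is freed while the task is still pending
    }
    return task();
}
```

Calling `RunGuarded(true)` models the dangerous ordering, where the object is freed before the pending reply runs. With the guarded task the reply is simply dropped, whereas the raw-pointer version would read freed memory at that point.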

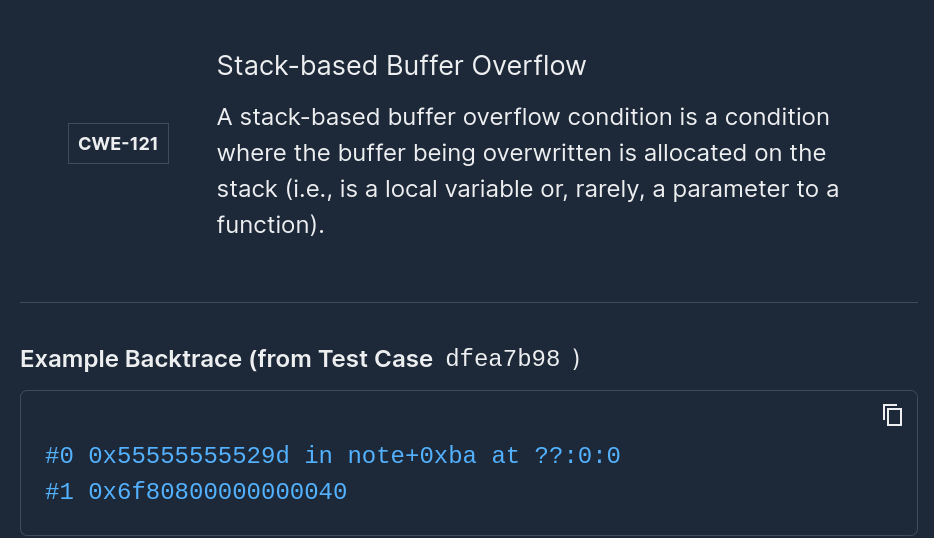

Vamosi: Use After Free is a surprisingly common vulnerability. It’s CWE-416, in case you’re wondering. Use After Free refers to the attempt to access memory after it has been freed, which can cause a program to crash or potentially result in the execution of arbitrary code. So it’s kind of important. It was also a lucky break. In vulnerability research it doesn’t always work this way. Sometimes you put in the work and come up empty -- no vulnerabilities. So how does one go about doing that, I mean, being there at the right time and place? I mean this was a newly added feature in Edge, yet there was Tim, waiting.

Tim: I had been looking at Chromium for a while actually. Basically whenever I have free time at work, I'm looking for bugs in open source projects, or just major software, and Chrome has been my target lately. And so I stumbled upon this bug class -- this type of vulnerability.

What I mean by a bug class is just a common pattern of code, which has a vulnerability in it, that is repeated in several places across a codebase.

After reading Chrome bug reports, I decided to see if there were other instances of it, and sure enough there was one that was recently introduced with this new feature. And it just happened to be at the right time.

Vamosi: Other than this particular vulnerability, sometimes you stick with what you know works. Tim has since found a few more in the Chromium sandboxes.

Tim: Recently I reported like four or five different instances of this one bug class that I found in the Chrome renderer. So this isn't a sandbox escape but this is a bug that would get code execution in the sandbox process initially, and I found like four or five instances of the same similar bug class there that I reported recently, I don't know if the reports are public yet but.

Vamosi: Hackers don’t exist in a vacuum. There are others, sometimes looking in the same place at the same time, and when two or more hackers find a vulnerability at the same time, that’s called a collision.

Tim: Yeah, actually. With the bugs I was talking about recently, a few of the ones that I reported were marked duplicate right away because someone else had previously reported them. So it's interesting. It seemed like a few people were kind of looking into this bug class at the same time. And we were just using slightly different methods to find them, I guess. And we ended up with slightly different sets of bugs that we reported. But there was some overlap between the sets that we found. Right.

Vamosi: Pretty cool. How does one go about finding bugs like this?

Tim: Um, so I've used pretty much every technique available for finding bugs that I'm aware of. So, source code auditing -- just reading code of different components and looking for bug patterns that I'm aware of or trying to think of new bug patterns. Or, if I find a particular area that looks like it's ripe for certain techniques like fuzzing or static analysis of some sort, I will try to implement those techniques on that component of Chrome.

Vamosi: What’s Tim’s background in hacking?

Tim: I acquired most of my skills initially from Capture the Flag competitions, and especially lately the capture the flags have been becoming more real-world. So a lot of the challenges in CTFs now are based on real-world vulnerabilities, or are just actual real-world vulnerabilities that are now one-days. So, if you want to get practice in writing exploits on real-world software, CTFs are actually a great way to learn that right now.

Vamosi: You’d think doing CTFs is where he acquired his tools. That’s not necessarily the case.

Tim: Oh, actually, in CTFs it's more common that the bug is more or less obvious. It is at least in these challenges that are based on real-world vulnerabilities. And it's more a test, and an assessment, of your ability to write the exploit for the bug. So with respect to learning tools for bug hunting, I wouldn't say that CTFs are the best way to learn that, but they're certainly a good way to learn about different types of vulnerabilities that exist, and get practice with exploiting them.

Vamosi: So what leet tools does Tim use?

Tim: I just run Linux on my personal machine, just Ubuntu or Debian or something. But for bug hunting tools, I mean, the most common one that people use is AFL, which is a fuzzing engine that is relatively easy to get up and running with pretty much any code, whether it's open source or you just have a compiled binary. However, my favorite fuzzing engine is actually libFuzzer, which is developed by Google as part of the LLVM project. And there you have to actually write some code: you write a little harness for the fuzzer to attach to and provide input into the code you're interested in fuzzing. But that would be my favorite fuzzing engine.
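The "little harness" Tim mentions can be quite short. The sketch below assumes a toy function, `ParseKeyValue`, as the code under test; it is hypothetical and stands in for whatever component you actually want to fuzz. The entry point `LLVMFuzzerTestOneInput` is the function libFuzzer calls repeatedly with generated inputs.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical code under test: expects "key=value" and returns the length
// of the key, or -1 if there is no '=' or the key is empty.
int ParseKeyValue(const std::string& input) {
    const std::size_t eq = input.find('=');
    if (eq == std::string::npos || eq == 0) {
        return -1;
    }
    return static_cast<int>(eq);
}

// libFuzzer entry point: called once per generated input. The harness turns
// the raw bytes into whatever the target expects and hands them over.
extern "C" int LLVMFuzzerTestOneInput(const std::uint8_t* data, std::size_t size) {
    const std::string input(reinterpret_cast<const char*>(data), size);
    ParseKeyValue(input);  // crashes and sanitizer reports are what the fuzzer looks for
    return 0;              // non-crashing inputs return 0
}
```

With a recent Clang, a file like this can typically be built with `clang++ -g -fsanitize=fuzzer,address harness.cc`; libFuzzer supplies `main`, so the harness does not define one. Pairing the fuzzer with AddressSanitizer is what lets memory bugs such as use-after-free surface as a report instead of passing silently.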

Vamosi: How do you get started?

Tim: I would say that there's a website called HackerOne, which basically organizes bug bounties for a bunch of different software vendors, and they have a very wide selection of targets there. A lot of people I know, myself included, actually got started in bug bounties by looking for bugs in some HackerOne-related vendors. Even things like looking for bugs in the Python interpreter or web servers of some sort, there's plenty of bounties to get started on.

I wouldn't say browsers are my favorite necessarily, but they're the most high impact currently, and they have the highest bounty rewards. Probably my most interesting targets would be operating system kernels.

Tim: It varies depending on how difficult things are at the given time, basically. But at the same time, for browsers in particular, the sandbox escape tends to be the bottleneck if an attacker was trying to actually write a full chain exploit. And so the bounties correspond to that relative difficulty, and currently browsers are the bottleneck in most cases.

Vamosi: An Exploit Chain is an attack that involves multiple exploits or attacks. Hackers will usually not use only one method, but multiples, chaining them together. Browsers, with their sandboxes, create a problem. Unless, as Tim found, you have a way to escape the sandbox.

Tim: Typically with bug bounties, they prefer that you report right away, if you can. If the bug is currently affecting users, it's probably best to report right away, and typically the bounty vendors -- I know this is true for Google in particular -- will give you some time to finish writing an exploit for it, if they want to offer you extra for proving that it's exploitable. So, if you're reporting a bug like this to at least Google Chrome, but probably other vendors too, and you report the bug immediately and then take some time to write the exploit, you can still get the full reward for the exploit.

Vamosi: The alternative to bounty programs is the dark markets, the dark web, where exploits have a price as well.

Tim: I don't know how accurate this is. But I would say that there are sort of private market prices for these different vulnerabilities, where you aren't responsibly disclosing them and rather you're selling them for profit to some sort of exploit broker or something. There are publicly known prices for the different types of vulnerabilities and the target software that they're in. So you can get a sense of how difficult, or important, different bug classes are based on those private market prices. And typically, Google Chrome and Android bugs are the highest value currently. And I would say that that indicates that those are kind of the hardest targets at the moment. But it varies with time. Sometimes, iOS full chains are higher value. So,

Vamosi: Paying for vulnerabilities creates some interesting possibilities. For example, there’s the idea that some hackers may be millionaires today because of what they’re finding and reporting.

Tim: I've certainly heard of examples of people that find like a nice methodology for finding similar types of bugs in all kinds of different products, and for instance on HackerOne there are public scoreboards of how much money certain users have made. And there are certainly people who have made in the millions of dollars from bug bounties. But I would also say that, in my personal opinion, bug bounties aren't compensating well enough to make bounty hunting like a stable career for a lot of people, which I think it should be able to do. I think the security of software would benefit greatly if bounties were a little higher and more people could pursue this as a full time career.

Vamosi: Fortunately, Tim works at a company that builds in time for this kind of stuff.

Tim: It's technically part of my job at Theori. Basically when we have time in between contracts we're doing vulnerability research on major software and collecting bug bounties and stuff.

Vamosi: That said, not everything is perfect … yet.

Tim: That's right, yeah, I think having a bounty program at all is great, and certainly a step in the right direction. Because, I mean, it has a proven track record of improving the security of the software. But I would argue that it could be improved much more if the incentives were a little higher for people to devote the time to acquire the general bug hunting skills but also the domain specific skills for that specific software, which takes a lot of time on its own. And if it was rewarded a little better, you could have more experts on that specific software that are finding vulnerabilities and reporting them.

Well I mean I definitely have fun doing it so I would likely be doing it either way, but so yeah I would say the bounties are just a nice addition.

Vamosi: The work that Tim and others are doing is significant, whether it be looking specifically at browser sandboxes, or more generally in the operating systems we use daily. Identifying vulnerabilities early so that they can be fixed, that’s valuable. So should ethical hackers be paid for responsibly reporting vulnerabilities? I think so. And I think bug bounty programs can be a step toward a sustainable software security model, but there needs to be much more, such as a sustainable way for vendors to take in and remediate all these bugs. There needs to be a certain security maturity among the vendors, for example. And that, perhaps, is a discussion for another episode of The Hacker Mind.

Hey, before you go, remember to subscribe to The Hacker Mind and never miss another episode. You can find us on Google Podcasts, Apple Podcasts, Spotify, and Amazon Music … so many different platforms. Check us out.

For the Hacker Mind, I’m Robert Vamosi.
