The Hacker Mind Podcast: Can a Machine Think Like A Hacker?

If you’re a fan of Game of Thrones, then here’s a little-known bit of trivia. In 1970, a young science fiction writer turned chess player, George RR Martin, played with his Northwestern University team against one of the fastest computers of the time -- and the humans won. It would take another 27 years before IBM’s Deep Blue defeated world chess champion Garry Kasparov, and -- how’s this for a coincidence? -- this happened a few months after Martin published his first book in the Game of Thrones series.

Chess, with its focus on logic and strategy, with a touch of creativity, was long considered the high bar for machine learning. Having attained that in 1997, attention turned elsewhere, to self-driving vehicles. Can a machine react to a variety of sensory input? Could autonomous vehicles ever think and react like a typical driver? So in 2005 the Defense Advanced Research Projects Agency or DARPA started a series of challenges to push the technology. In 2016, DARPA turned to hacking and sponsored a CTF challenge, the Cyber Grand Challenge, to see whether machines could successfully find and patch software vulnerabilities. This was an automated version of a typical capture the flag competition, and what happened there is covered in EP 03 of The Hacker Mind. It’s worth a listen. But what happened the day after CGC? That was the day the very best human hackers were invited to play against the winner of CGC, a computer reasoning system named Mayhem. This was like Martin challenging the Vax computer at Northwestern. Or Kasparov challenging Deep Blue. And it starts to answer the question, can a machine really think like a hacker?

Vamosi: Welcome to The Hacker Mind, an original podcast from ForAllSecure. It's about challenging our expectations about the people who hack for a living. I’m Robert Vamosi and in this episode I'm continuing to talk about the rise of security automation, and what we learned after the first and only Cyber Grand Challenge in 2016.

Vamosi: Game Theory is an important part of the underlying strategy used by hackers when playing attack and defend Capture the Flag. It’s about thinking how your opponent might respond to an event and then planning for it. Knowing when to patch and when not to was part of the winning strategy behind DARPA’s CGC, which was modeled off the DEF CON CTF. The machines were able to attack each other and therefore had to defend against one another. But for a machine to join the otherwise human activity, changes had to be made to the human CTF game as well. Tyler, team captain of the champion Plaid Parliament of Pwning or PPP explains.

Tyler: The DARPA Cyber Grand Challenge organizers collaborated or had some agreement with the DEF CON CTF organizers, so the platform for DEFCON CTF that year was the same platform as the Cyber Grand Challenge platform.

Vamosi: Tyler has a unique view. He worked on the Cyber Grand Challenge for two years and then went on to lead PPP at DEF CON that year. What was it like to go from one competition to the other the very next day?

Tyler: This was interesting for two reasons. So, one was that they actually had the Mayhem system participate in the Capture the Flag contest against humans, which was pretty interesting and then the other was that I was participating separately on the PPP team. But I was very familiar with all the intricacies of the platform because I've been working with it for two years. Yeah, they loosen the rules a bit for that CTF and allowed some humans to work on the Mayhem team.

Alex: So because it won, the organizers of the CTF at the time spent a lot of work to make that happen and I'm very grateful that they did.

Vamosi: This is Alex Rebert, cofounder of ForAllSecure and team captain at the CGC for Mayhem.

Alex: They designed the CTF based on CGC, to be able to invite the machine to play against some of the best CTF players in the world. Right. And so we came as Mayhem. Well, some of them. We're just sitting there, making sure Mayhem ran smoothly.

Eyre: After CGC, we discovered that yes, we will be playing against a machine -- the machine that won the DARPA CGC competition. And then that machine will be playing against 16 other humans. It was very, very, very interesting at that point.

Vamosi: Meet Eyre. Unlike Alex and Tyler, she was not part of the CGC team. She’s a member of PPP. And as a seasoned CTF player at DEF CON, Eyre was used to learning what the rules of play would be for this year’s CTF challenge a mere 24 hours in advance of the game. At DEF CON 24, with Mayhem in the room, the changes were significant.

Eyre: We had to learn about the operating system that the machines are playing on. So basically the organizers of the CTF had to tailor the game, so that the machine would be able to play more easily and be able to integrate into the game that we were about to play. So we had to learn what the machine was doing previously and build some tools around that. Luckily, they sort of introduced the operating system beforehand so during quals there were some binaries that were written for this specific operating system so some people were able to practice and some people started thinking of tools, because it was a hint as to what's coming. So our team also prepared and wrote some tools to try to automate analyses. We also had a small fuzzing system. It was similar to Mayhem but not quite as extensive. So, we were also preparing for it.

Vamosi: Despite the best efforts of both organizers, the two systems really weren’t that well integrated.

Tyler: There were differences in the infrastructure that made Mayhem very sad because the organizers didn't really have a chance to test it.

Ned: Well, my understanding was that it was setup problems.

Vamosi: This is Ned. Like Tyler, he was a member of both the CGC and PPP teams at DEF CON 24.

Ned: Yeah, there were just some tiny misconfigurations from what I remember. As far as the competition, I don't think we got a clear picture of how Mayhem would have done if everything was equal. So, basically, to this day, I don't know. From what I understand it solved some problems and there were just some issues with actually trying to exploit.

Alex: Mayhem didn’t do so well against actual human players.

The main win, I can say, is that we were not last all the time. We were like second to last or like third to last during the meat of the competition, which I think is cool, but it's not something that you can put in a flashy headline. It was interesting to see what automation could do against human players. It was not an entirely fair comparison due to technical issues. And this is maybe fair. So, machines, they're not very flexible.

Tyler: So, there's a lot of kind of unfortunate things. Mayhem didn't do very well at all. I think it ended up in last place. Part of that was because it's a hard competition, whereas the Cyber Grand Challenge was, I don't remember the exact details but, it was something on the order of 100 binaries, over the course of eight hours, that was easy to medium to maybe slightly hard difficulty. The DEF CON CTF is maybe a dozen binaries or less that are all very hard difficulty. The other issue was more logistical, which is that the organizers for the Cyber Grand Challenge were very paranoid about any sort of details leaking about infrastructure, which means that they had the DEF CON organizers implement a completely different infrastructure that was supposed to be API compatible with the Cyber Grand Challenge infrastructure, because they were worried if there was a vulnerability in one of them, then it could leak out. So they were very careful about not letting the actual contest infrastructure be exposed to anyone.

Eyre: The game was very different. All the attacking was done on the servers themselves so it was being evaluated on a separate machine. With previous games it was the IP address of your other teams and opponents, and you directly attack the services on that particular IP, send exploits and do all that stuff. But for this one, we send it to a scoring server. And the scoring server evaluated our binaries or certain exploits and figured out if our patches were meeting SLA checks. SLA checks pretty much means that the service is running as intended. So, we didn't break anything from the service itself.

Tyler: With harder challenges, it's very important to get an initial idea of what the challenge is doing based on network traffic. There were a couple other things but that was one of the issues that came up.

Alex: During the Cyber Grand Challenge and the DEF CON CTF, the system provided you with the network traffic that your services, your binaries, are seeing, right? And so, you get this network dump. And you can analyze it, let's say, to extract bugs, exploits, and make your own out of it, and that's really really valuable. A lot of the exploits that people get are from just analyzing the packets that other teams are sending. Compared to the Cyber Grand Challenge spec, DEFCON CTF had the sender and the receiver bit flipped.

Tyler: So for example, you know the network traffic that we got. I think they flipped source and destination. So, when we had something reported to us, instead of seeing like PPP threw this exploit at Mayhem. Instead, it would say PPP received this exploit from Mayhem. So, for our team looking at it as humans, it's like okay this is screwed up, we'll just flip it around, but for the automated system, it's like, I don't know what's going on.
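
The fix-up Tyler describes, swapping source and destination back before analysis, can be sketched in a few lines of Python. This is only an illustration: the record layout and the field names `src`, `dst`, and `payload` are hypothetical, since the episode does not describe the actual CGC or DEF CON capture format, and this is not Mayhem's code.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TrafficRecord:
    src: str        # team the feed reports as the sender (hypothetical field)
    dst: str        # team the feed reports as the receiver (hypothetical field)
    payload: bytes  # raw bytes that were sent to the service


def normalize(record: TrafficRecord, feed_is_flipped: bool) -> TrafficRecord:
    """Swap src and dst when the feed is known to report them reversed."""
    if not feed_is_flipped:
        return record
    return replace(record, src=record.dst, dst=record.src)


def inbound_payloads(records, our_team: str, feed_is_flipped: bool):
    """Return the payloads that other teams actually threw at our_team."""
    fixed = (normalize(r, feed_is_flipped) for r in records)
    return [r.payload for r in fixed if r.dst == our_team]


# PPP attacked Mayhem, but the feed reports it the other way around.
feed = [TrafficRecord(src="Mayhem", dst="PPP", payload=b"crashing input")]
mayhem_inbound = inbound_payloads(feed, "Mayhem", feed_is_flipped=True)
```

A human can flip that one flag in their head. An automated pipeline that trusts the specification filters on the wrong field and sees no inbound traffic at all.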

Alex: Mayhem never really understood that at all. We didn't design Mayhem to look for errors in the specification or anything like that in the game. And so that's where human help was necessary. Like I spent a lot of time, one night, during the competition to fix that in Mayhem and make sure we analyzed all of the traffic that we saw. And then we found a few more crashes, and there were not that many, so that was pretty significant. And I think that was also an interesting insight into the value of having people in the loop.

Eyre: That was the year where they introduced consensus in the game. So consensus evaluation means that everyone that's playing the game knows the exact state of the game. Also all our patches for the different binaries are available to all the teams, so they're being analyzed. So, if a team patches a vulnerable service, we'd be able to look at what they changed, and try to figure out if they were able to successfully patch the bug or not and find other vulnerabilities against that. But with introducing this type of mechanism, it also brought the challenge where we had to analyze a lot of binaries at the same time. Because it was about 15 teams at that point. And we were receiving everyone's binaries, different versions of the binaries in different states. And we have to keep track of everyone's patches so we know what kind of exploits to send each team. Being able to keep track of all those introduced an interesting problem where some kind of automated analysis might come into play.
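
One simple form that bookkeeping could take is grouping teams by a hash of the binary they are currently running, so each distinct patch only has to be analyzed once. The sketch below is an assumption about how such tracking might look, not a description of PPP's tooling; the team names and function names are made up for illustration.

```python
import hashlib
from collections import defaultdict


def fingerprint(binary: bytes) -> str:
    """Identify a binary by its SHA-256 digest."""
    return hashlib.sha256(binary).hexdigest()


def group_by_patch(current_binaries: dict) -> dict:
    """Map each distinct binary fingerprint to the teams running it."""
    groups = defaultdict(list)
    for team, binary in sorted(current_binaries.items()):
        groups[fingerprint(binary)].append(team)
    return dict(groups)


def teams_still_unpatched(current_binaries: dict, original: bytes) -> list:
    """Teams whose service is byte-identical to the original release."""
    return group_by_patch(current_binaries).get(fingerprint(original), [])


original = b"original service bytes"
fielded = {
    "team_a": original,
    "team_b": b"patched service bytes",
    "team_c": b"patched service bytes",  # copied team_b's patch
    "team_d": original,
}
unpatched = teams_still_unpatched(fielded, original)
distinct_versions = len(group_by_patch(fielded))
```

With about 15 teams and a dozen services, grouping like this shrinks the work from one analysis per team per service to one per distinct patch.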

Vamosi: Hmm. Maybe there’s a place for automation in these human games. One of the teams, not PPP, brought their machine from CGC and operated as kind of a hybrid team system. But in the end, the team finished 10th out of 15, so maybe hybrid isn’t that much of a game changer?

Alex: The difficulty of those hybrid systems is -- are they doing well because the people are good? Or are they doing well because the system is good? I know DARPA has a couple hybrid cybersecurity programs. I'm not sure how they solve that challenge.

Vamosi: So what does and what does not get automated? Having CGC and DEF CON back to back may have provided some answers, if not raised the question.

Ned: They're both really big events happening in close proximity and they're both using the same skill set. So, I do remember though that typically there's not a lot of conversation about automating in this space during CTFs. And I think people can be cynical about what can be automated, but I do remember, once people got the idea that this probably could be automated they start to think about, well what am I doing right now? That's so repetitive. And it definitely casts a shadow over some of what we were doing manually and there's kind of this big question of, oh wow, we did create an automatic CTF bot. It's one of these things where it's like, I bet if some of these challenges could have been done by a bot it's clearly superior. So there's a lot of thought about that at the time. When it comes to problem solving, I like it when there's not a lot of fluff added to a problem that you have to dig through. It's nice when a challenge is just very cleanly presented. It's just fundamentally interesting and difficult. So the fact that the CTF was formatted like CGC made it better because it basically took out a lot of the randomness that you would normally see. And it provided a standardized format for the challenges.

Vamosi: Maybe the competition was level, but the underlying fact is that human reasoning and creativity remain superior to a machine.

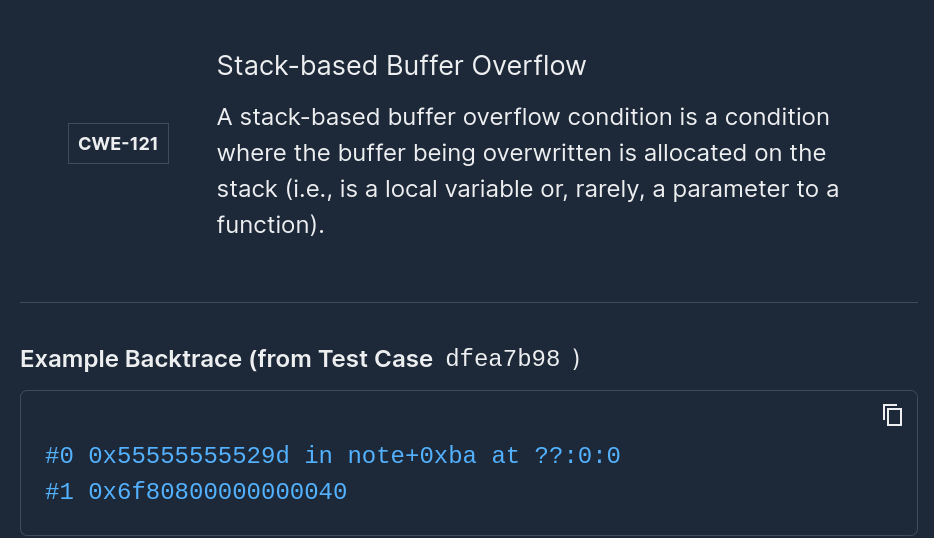

Ned: As you get closer to the final product with an exploit, things get messier and messier. And so you start out with, there's a program, here's how you input something into it, get it to crash. Mayhem is designed to explore that problem so it can provide you with evidence of a vulnerability and an input that will prove that we can get into this buggy state. But the problem is that's not enough to take that bug and then stitch it together with some pre existing techniques to actually get the end to end package that will just exploit the system. And this is something we can see in the security field, in general. The strategies that are involved in getting a working exploit versus the inputs to them, which are the vulnerabilities themselves, these are kind of two orthogonal things. So the vulnerability part hasn't changed much in the past decade. But how you actually exploit the vulnerability, that's changing yearly depending on what you're looking at. And so, those things can be automated. But I think that's where the human is really important to at least maintain such a system.

Vamosi: As Alex said, at the end of the day, machines just aren’t very flexible. Sometimes they have to be directed -- even told -- what is a bug and what is not.

Ned: You know I've seen things like sometimes a machine couldn't recognize what a bug even is. And so the human would have to encode. And in fact, that's how it works. You can have a crash, which indicates something went wrong. Other than that, a lot of the tools we use to detect vulnerabilities are really just humans having encoded when you see this thing happening, this is flagged for us and this is a highly important signal, if you ever see it it's highly indicative of a bug. And so, you need those signals. And once you have the bug, the human understands like okay well this is what that signal represents and here's how I turn it into an exploit.

Vamosi: Not all vulnerabilities lend themselves to exploitation, but sometimes you can piggyback seemingly unrelated vulnerabilities.

Ned: The canonical example, not to go too technical, but generally you want to be corrupting something like a pointer. And there's this thing called ASLR where pointers are randomized so you have to know in advance. As an attacker, you don't know what value to put there. But if you have another vulnerability to read memory you're not supposed to, you may be able to steal one of those values and then modify it to then use the other vulnerability to overwrite it. So, that's how it works is you have like, well I know to get around this. I need to corrupt the pointer and I know what it's gonna look like if that happens, and I know I need to be able to read something, so I need a way to annotate that. If I read something from here I wasn't supposed to have read it and those are the kinds of things that humans do today. Humans are needed to know how to encode that.
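
The arithmetic behind "steal one of those values and then modify it" is short enough to show. ASLR randomizes where a module is loaded, but offsets inside the module stay fixed, so one leaked pointer reveals the base and, from there, every other address. The addresses and offsets below are invented for illustration, and the sketch assumes 4 KiB page alignment.

```python
PAGE_MASK = 0xFFF  # assumes modules are loaded on 4 KiB page boundaries


def module_base(leaked_pointer: int, known_offset: int) -> int:
    """Recover the randomized load address from a single leaked pointer."""
    base = leaked_pointer - known_offset
    if base & PAGE_MASK:
        raise ValueError("leak or offset is wrong: base is not page-aligned")
    return base


def runtime_address(base: int, target_offset: int) -> int:
    """Address of any other symbol in the same module for this run."""
    return base + target_offset


# Hypothetical values: a pointer read out of bounds, and two offsets
# taken from static analysis of the binary.
leaked = 0x7F3A1C2B4560
base = module_base(leaked, known_offset=0x4560)
target = runtime_address(base, target_offset=0x1230)
```

The value in `target` is what the second vulnerability would then write over the corrupted pointer. The alignment check is the kind of hand-encoded signal Ned is talking about: a person has to tell the tool what a plausible leak looks like.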

Vamosi: That said, Mayhem didn’t do too badly creating its own patches and exploits. One aspect of the CGC challenge was to share patches produced by each machine, which were then scored for effectiveness. This carried over into the DEF CON CTF.

Tyler: It was pretty funny because we knew exactly how the system works. So, one of the unique features of CGC that has since gone down to other CTFs is the idea of if I patch a service, the binary that I produce becomes public. So sometimes in CTF that's not the case. So after I patch something no one else can see it, unless they hack into my system and find it. Whereas with CGC and other CTF after CGC, they'll actually publish all of them, which makes it more interesting because you can't do stupid things where it's like, oh I added six to all the offsets and so now your thing doesn't work. It's because you'd see something stupid like that. So part of this is people will copy other people's patches, if they think it's going to be a good patch, because they're like well, if you patch the system why would I bother to spend time doing it if you already patched it. So then it goes one level further with say well I'll add a backdoor. And so if you copy my patch, you'll download a backdoored version that only I can exploit. And so, for some of the things like CGC we ended up not pushing a backdoored version, because we were too worried that something would happen and our backdoor would fail. So, there are plenty of times when as PPP, we were like, well, that Mayhem system looks like it did a really good job. Let's just take its patch, because we know it's not gonna backdoor. And we saw other teams doing the same thing which is really funny, because you get to see everyone's traffic so like well you know this other team decided that Mayhem did a good job so they're probably right so we'll just copy it too. So there were quite a few things like that that were pretty funny.

Vamosi: In the end, Mayhem really didn’t do that badly. Oh, don’t get me wrong, it still lost, but the highest scoring team that year happened to be PPP, with a final score of 133,000. Mayhem, in last place, finished with 73,000, but the next highest scoring team was only 300 ahead. That's not bad for a machine. And given how hard it is sometimes to exploit a vulnerability, Mayhem did manage to surprise even PPP.

Eyre: As far as like having Mayhem play, I thought that was very interesting, because I remember a time where Mayhem actually scored on other teams that was like whoa you know that's pretty cool a machine was scoring against these human players and I haven't seen that before playing CTF and I thought that was really cool.

Tyler: One of the cool examples that I only learned afterwards was there was some minor bug that was the ability to write null byte somewhere, which when we looked at this as PPP, we're like okay we can cause the system to crash by writing a null byte out of bounds. But we can't really do a whole lot with this. You know it seems like it'd be really annoying to try to turn into an exploit that would get us control of the instruction pointer and another register, which is what you're required to do to show you exploited it. But it turns out that Mayhem actually found an exploit for that.

Eyre: This was also very memorable from that CTF. There was a bug that George Hotz found.

Vamosi: Hang on, George Hotz, aka GeoHot, just happened to be playing for PPP that year. You may remember that at age 17 he unlocked his iPhone so that he could use any carrier he wanted to. That might not sound incredible except, he was the first person to do that. And only a few years later he reverse engineered a Sony PlayStation. This was such a problem that Sony had to shut down its PlayStation service to fix it. So this same George Hotz found a bug during the CTF at DEF CON 24.

Eyre: He found this bug, and he was looking at how to exploit the bug and trying to figure out what to do. And I remember him saying this bug looks really annoying to exploit. And so it was taking him a while to write an exploit for it and then all of a sudden, we see Mayhem exploiting the same bug and throwing it against us. I thought that was pretty cool.

Tyler: It's a pretty complicated exploit where it overwrites an address, and it overwrites the least significant byte with a null byte, which then when something tries to restore some variables off the stack, it restores the wrong variables. And now that it's restored the wrong variables, you can now re-exploit this part in a different way because all the variables are scrambled. So, it was a several stage process where it was pretty in depth. And it was pretty cool because humans were like that seems really annoying, I don't know how we're going to do that but apparently the automated system did it.
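
The first stage Tyler mentions, a single null byte landing on the least significant byte of a stored address, can be shown as arithmetic. The sketch assumes a 32-bit little-endian target, where the low byte of a stored address comes first in memory. The address is made up, and this is not a reconstruction of Mayhem's actual exploit.

```python
import struct


def null_byte_overwrite(saved_address: int) -> int:
    """Value of a stored 32-bit address after its lowest byte is zeroed."""
    raw = bytearray(struct.pack("<I", saved_address))  # little-endian layout
    raw[0] = 0x00  # the out-of-bounds null byte lands on the low byte
    return struct.unpack("<I", bytes(raw))[0]


saved = 0xBAAAAF48                   # hypothetical saved stack address
corrupted = null_byte_overwrite(saved)
shift = saved - corrupted            # how far the restored frame moves
```

Here the stored address drops by 0x48 bytes, so code that later restores variables relative to it reads the wrong stack slots. That is the "restores the wrong variables" step that makes the later stages possible.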

Vamosi: So what, if anything, did we learn from this exercise? Yeah, it’s cool to have a machine go up against some of the best hackers in the world. But are there any takeaways from it all?

Ned: Like anything else, you have a spectrum right. So there's what humans are doing by hand and then there's when you notice that we might be able to automate some repetitive action. And then you decide it's actually worth it to go through the work to automate that because often it takes some effort. I honestly think CGC gave the funding and the incentive to actually work on that problem. I have no doubt that CTF could be dramatically more automated but there's just not really an incentive for someone to be spending that kind of effort on it. So that was the question in my head is just like right now the incentive is to push people to do things manually because it's cost effective enough. I'm staring into vulnerability research here, but a CTF team is doing it as a hobby. They're gonna try to be competitive, as humans. They're not really thinking about, or just maybe it's too difficult to spend that amount of resources to automate. So, when I said casting a shadow I started to think about that a lot more and from what I remember there were some other teammates talking about what would it be like if you could automate as much as possible and you didn't have the resource constraints, like a student group has.

Vamosi: And maybe, just maybe, hacker CTFs are just too abstract for a machine, not practical in daily life. Those who play CTFs might disagree.

Eyre: Some overlap that I do see, is being able to stare at a problem during a CTF and trying to analyze what's going on. Being able to stare at it and just keep persevering is very helpful because that directly translates to work itself, because there are a lot of unknowns in the real world. And if you just give up you won't be able to solve anything really. But, as part of my job I also do a lot of reverse engineering. So it directly translates to it, and some of the tooling in development work, so playing CTF you also do a lot of development work like setting up infrastructures, setting up different systems, to be able to play the game. That directly translates because programming is a big part of my job still. Being able to understand how binaries work and how vulnerabilities work is also quite important in my job, because I get to deal with real life vulnerabilities being exploited by attackers trying to attack my place of employment, as well as the users that are using their services, and I see this stuff every day because part of my job is tracking different hacker groups, and being able to know what they're up to. What kind of campaigns they're running. So, most of the stuff that I kind of do in CTFs translates very well with what I do in real life.

Vamosi: So we’re still a few years away from having a computer think like a hacker. But, like a machine playing chess, it will happen. It’s good that we have this baseline, that we can start today to have both the technical and ethical discussions around what benefits autonomous systems will bring to information security. But will the machines ultimately replace us? Probably not. At least, I hope not. For The Hacker Mind, I remain the very human Robert Vamosi.