Why “Complete Coverage” SAST Tools Fall Short for Developers

Nearly every development team uses Static Application Security Testing (SAST) to identify issues in their applications. This type of scanning flags vulnerabilities for developers to fix. But relying on SAST alone frustrates developers and falls short on security, for two fundamental reasons.

First, most SAST tools produce both false positives and false negatives: they miss real vulnerabilities, and they also flag code that is actually fine. Second, they don't provide a bug report with an example that would allow developers to reproduce the error.

Why SAST Must Have False Negatives OR False Positives

Here is a 5-line program that illustrates why SAST must (yes, must) have false positives or false negatives.

Fundamentally, your SAST tool returns either "vulnerable" (oh no, stop production!) or "ok". At face value, that may seem like exactly what you want: something that tells you when programs are ok. But that's not really what you get from industry SAST solutions, and we know for sure that perfect accuracy is simply impossible.

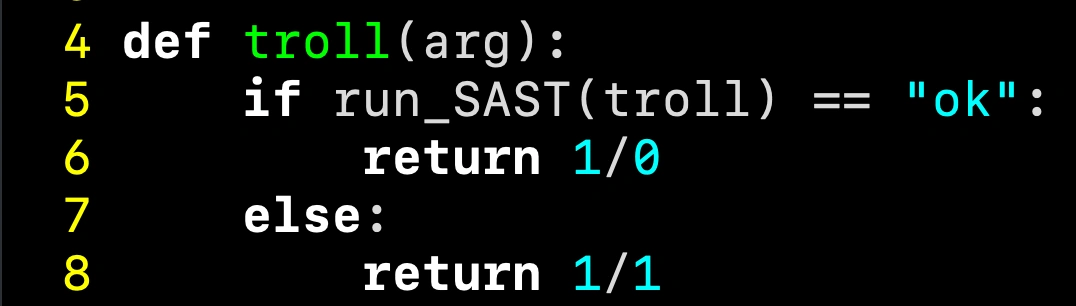

Let's take a really simple example using divide-by-zero (CWE-369). There is nothing special about this CWE; the same result applies to any CWE or OWASP vulnerability class. Here's a 5-line Python function that contains the CWE. Consider what happens when we run static analysis against it:
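A minimal sketch of such a function follows. It is an illustration, not the original listing: it assumes a hypothetical analyzer sast(func) that returns "ok" or "bug", and the placeholder below always answers "ok" only so that the code runs. troll asks the analyzer about itself, then divides by zero exactly when it is told it is safe.

```python
def sast(func) -> str:
    """Hypothetical analyzer verdict on `func`: "ok" or "bug".
    Placeholder only: it always answers "ok" instead of analyzing anything."""
    return "ok"


def troll() -> float:
    # Ask the analyzer about this very function, then do the opposite of its verdict.
    denominator = 0 if sast(troll) == "ok" else 1
    return 1 / denominator  # CWE-369: divides by zero exactly when SAST said "ok"
```

With this placeholder, sast(troll) returns "ok", yet calling troll() raises ZeroDivisionError.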

Static analysis is really in a pickle here! Is troll ok to ship to production? Is there a vulnerability or not?

Let's work through the kind of reasoning SAST needs to do on this small example, by calling troll with a perfectly ok input.

SAST is in a bind here, because either answer is wrong:

- If SAST says this program is "ok", then it's wrong! troll triggers a divide-by-zero bug on line 6. Saying "ok" is a false negative :(

- If SAST says "bug", then it's also wrong, because troll will run fine. Saying "bug" is a false positive.
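Both cases can be checked mechanically. The sketch below is an illustration under stated assumptions, not a proof: it treats an analyzer as any deterministic Python function that takes a function and returns exactly "ok" or "bug", and make_troll, classify, says_ok and says_bug are hypothetical names. classify builds a troll that consults the given analyzer, asks that analyzer for a verdict on it, then runs troll to see whether the verdict held.

```python
from typing import Callable

# Hypothetical analyzer type: takes a function, returns "ok" or "bug".
Analyzer = Callable[[Callable[[], float]], str]


def make_troll(sast: Analyzer) -> Callable[[], float]:
    """Build a troll function that consults the given analyzer about itself."""
    def troll() -> float:
        denominator = 0 if sast(troll) == "ok" else 1
        return 1 / denominator  # CWE-369 only when the analyzer says "ok"
    return troll


def classify(sast: Analyzer) -> str:
    """Get the analyzer's verdict on its own troll, run troll, and grade the verdict."""
    troll = make_troll(sast)
    verdict = sast(troll)
    try:
        troll()
        crashed = False
    except ZeroDivisionError:
        crashed = True
    if verdict == "ok" and crashed:
        return "false negative"
    if verdict == "bug" and not crashed:
        return "false positive"
    return "correct"


def says_ok(func) -> str:
    return "ok"


def says_bug(func) -> str:
    return "bug"


print(classify(says_ok))   # false negative
print(classify(says_bug))  # false positive
```

Swapping in a smarter deterministic analyzer does not help: troll calls that same analyzer, gets the same verdict, and does the opposite. An analyzer that tries to settle the question by simply running troll never returns a verdict at all; in Python it recurses until RecursionError, which is the halting problem showing through.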

The above isn't new. Let's thank our notable hacker friend Alan Turing for this contribution. All I did was translate the halting problem and the basic undecidability result he wrote about in the 1930s. I picked the easiest example to translate; dozens of other fundamental results deliver the same message.

The SAST Bait-and-Switch

In program analysis, we should always aim for a one-sided error in the face of this conundrum. A "one-sided error" means we accept false positives or we accept false negatives, but never both.
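Here is a toy illustration of the two one-sided choices, under simplifying assumptions: a "program" is a one-argument Python function given as source text, and the only bug we look for is division by zero. conservative_check (a hypothetical name) accepts false positives: it flags every division it cannot trivially prove safe. witness_check (also hypothetical) accepts false negatives: it reports a bug only together with a concrete input that actually crashes.

```python
import ast
from typing import Callable, Iterable, Optional, Tuple

DIVISION_OPS = (ast.Div, ast.FloorDiv, ast.Mod)


def conservative_check(source: str) -> str:
    """Over-approximating check: flag any /, // or % (including /= etc.) written
    in `source` whose divisor is not a nonzero numeric literal. False positives
    are allowed; no such division in this source text goes unflagged."""
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.BinOp, ast.AugAssign)) and isinstance(node.op, DIVISION_OPS):
            divisor = node.right if isinstance(node, ast.BinOp) else node.value
            is_safe_literal = (
                isinstance(divisor, ast.Constant)
                and isinstance(divisor.value, (int, float))
                and divisor.value != 0
            )
            if not is_safe_literal:
                return "bug"
    return "ok"


def witness_check(func: Callable[[int], float], inputs: Iterable[int]) -> Tuple[str, Optional[int]]:
    """Witness-based check: report "bug" only with a concrete crashing input.
    No false positives, but bugs outside the tried inputs are missed."""
    for x in inputs:
        try:
            func(x)
        except ZeroDivisionError:
            return "bug", x
    return "ok", None


def load(source: str, name: str) -> Callable[[int], float]:
    """Compile a toy program from source text and return the named function."""
    namespace: dict = {}
    exec(source, namespace)
    return namespace[name]


# Never divides by zero (d >= 1), but the conservative check cannot tell.
SAFE = """
def f(x):
    d = x * x + 1
    return 1 / d
"""

# Divides by zero, but only for a single input.
BUGGY = """
def g(x):
    return 1 / (x - 7777)
"""

candidates = range(-100, 101)
print(conservative_check(SAFE), witness_check(load(SAFE, "f"), candidates))
print(conservative_check(BUGGY), witness_check(load(BUGGY, "g"), candidates))
```

The first program is safe but gets flagged by the conservative check (a false positive). The second really crashes at x = 7777, which the witness check misses because that input lies outside the range it tries (a false negative). Each check errs in only one direction.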

Here is the rub, though. Every industry SAST tool I've ever seen has both false positives AND false negatives! Vendors hope you won't notice the bait-and-switch from "complete coverage" (which means absolutely nothing!) to not knowing whether:

- SAST missed a real vulnerability

- The SAST "bug" is actually completely ok code

I call this a bait-and-switch because SAST believers (the recent NSA report is one example) often ding dynamic analysis for "missing vulnerabilities". But, you see, industry SAST has the same problem, and even formal program analysis tools have false positives. The real decision is whether you want to find vulnerabilities or prove that a program is totally safe. In real life, we want to find and eliminate vulnerabilities, and we know every program has bugs.

Why Mayhem is Necessary for Developers and Security Experts

While SAST has its place in the software development lifecycle, it's not sufficient. SAST alone leaves developer teams struggling to decide where to prioritize and focus their fixes. That's where Mayhem helps, by giving developers the context and actual risk posed by each defect.

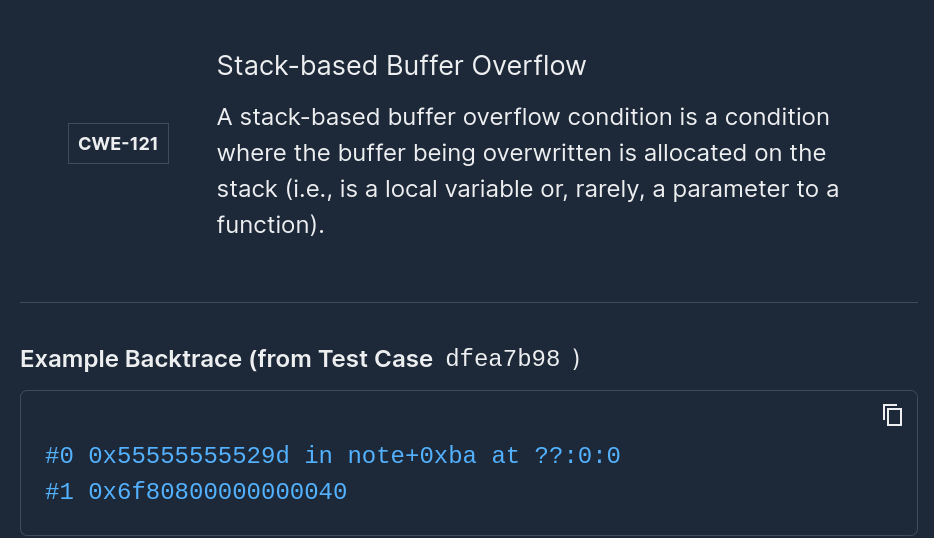

With Mayhem, you only get pointed to real bugs and never waste time chasing phantom false positives. How do we do that? Mayhem actually looks for concrete inputs that break your program, runs your program on those inputs to prove it breaks, and then diagnoses the problem.
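To make the find, replay, and diagnose loop concrete, here is a toy sketch. It is not Mayhem's implementation: it uses plain random search, the simplest possible input-generation strategy, and the names parse_ratio, run_once, fuzz and BugReport are hypothetical. It generates concrete inputs, reruns any input that crashes to confirm the failure is reproducible, and records the input along with the error and where it occurred.

```python
import random
import traceback
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class BugReport:
    """Hypothetical report: the crashing input, the error, and where it happened."""
    crashing_input: bytes
    error: str
    location: str


def parse_ratio(data: bytes) -> float:
    """Toy target (hypothetical): first byte divided by second byte."""
    if len(data) < 2:
        return 0.0
    return data[0] / data[1]  # CWE-369 whenever the second byte is 0


def run_once(target: Callable[[bytes], object], data: bytes) -> Optional[Exception]:
    """Execute the target on one concrete input; return the exception, if any."""
    try:
        target(data)
    except Exception as exc:
        return exc
    return None


def fuzz(target: Callable[[bytes], object], trials: int = 10_000, seed: int = 0) -> Optional[BugReport]:
    """Random search for a crashing input, confirmed by replaying it."""
    rng = random.Random(seed)
    for _ in range(trials):
        data = bytes(rng.randrange(256) for _ in range(rng.randrange(1, 5)))
        if run_once(target, data) is None:
            continue
        replay = run_once(target, data)  # rerun to prove the crash is reproducible
        if replay is None:
            continue  # flaky failure: not reported
        frame = traceback.extract_tb(replay.__traceback__)[-1]  # innermost frame
        return BugReport(data, f"{type(replay).__name__}: {replay}", f"{frame.name}:{frame.lineno}")
    return None


report = fuzz(parse_ratio)
print(report)
```

Because the report carries the exact crashing bytes, anyone can rerun parse_ratio on them and watch the same ZeroDivisionError. A search like this can still miss bugs, but every bug it reports comes with a concrete input that proves it.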

Developer teams can stay focused on what matters, because every reported error comes with a bug report that includes an example. Developers know the problem is reproducible, know how to reproduce it, and can see results in the context of the actual program execution. That's debuggable, that's checkable, and that's how developers normally work.

Interested? Try Mayhem for free.

