Uncovering Vulnerabilities In Open Source Libraries (CVE-2019-13499)

Introduction

In recent articles, ForAllSecure has discussed how we were able to use our next-generation fuzzing solution, Mayhem, to discover previously unknown vulnerabilities in several open source projects, including Netflix DIAL reference, Das U-Boot, and more. In this post, we will follow up on a prior article on using Mayhem to analyze stb and MATIO by reviewing three additional vulnerabilities found in another open source library. Prior to detailing these new vulnerabilities, we will examine some of the factors which can help to identify code which is a good candidate for fuzzing.

What Makes a Good Target?

When bug-hunting for fun, or when there’s no predetermined target, putting some thought into target selection can yield better results (more defects, found more quickly). When tasked with analyzing a specific project, it’s often worthwhile to survey the codebase and its dependencies. Identifying parts of the attack surface to prioritize can make the process more efficient and allow you to find the low-hanging fruit more rapidly. This lets fixes proceed in parallel with expanding the fuzzing campaign.

There are a few technical considerations and qualitative factors that may influence priorities. Let’s discuss the questions users should ask that will help assess which targets are most promising.

1. Can I Build and Run the Target?

This question is fairly self-explanatory, but the answer is sometimes a non-trivial hurdle: dynamic analysis needs to be able to run the target! Although analyzing black box binaries with Mayhem is possible, building the target from source allows for inserting compile-time instrumentation for coverage gathering, which can lead to efficiency gains.

When looking at open source projects (especially C/C++ codebases) you often encounter a variety of build systems, some of which are easier to get working than others. If you were able to build and run the target but had to jump through hoops to do so, the same obstacles may have dissuaded other researchers from proceeding further. This can indicate a target which has not been analyzed thoroughly, making it ripe for bug hunting.

2. What Language is the Target Written In?

Fuzzers are exceptional at finding issues in memory-unsafe languages such as C and C++, especially when coupled with tools like ASAN/MSAN/UBSAN. The range of security-relevant defects that users can expect to find in memory-safe languages, such as Golang or Rust, is smaller. However, fuzzing has still been effective at uncovering serious issues in Go and Rust projects. It is also fairly common for memory-safe languages to interface with C or C++ code via Foreign Function Interface (FFI), or to contain security-relevant code in unsafe blocks, meaning memory issues can still arise.

3. How Does This Target Accept Input?

To be compatible with grey-box coverage-guided fuzzers like Mayhem, a target must be able to take in input as a sequence of bytes and do something meaningful with it that affects its control flow. This byte sequence can come from a file, a network connection, the environment, etc. Understanding the different input sources, and the amount of initialization and the preconditions that must be satisfied before an input is accepted, gives important insight into how the target will perform under fuzzing. In-process fuzzers such as LibFuzzer can simplify these issues if the target can be made compatible. Understanding what code is exposed to untrusted input is also important for evaluating the impact of any bugs found.

4. What Input Format Does This Target Expect?

Due to the types of mutations which are performed by most grey-box fuzzers, densely packed binary formats tend to perform better than highly structured textual formats. Additional logic to improve the chances of generating an input which passes initial validation can help to alleviate this, at the cost of additional effort. Projects such as libprotobuf-mutator can help map randomly generated bytes from a fuzzer to more structured input, or custom structure-aware mutators can be developed to improve efficiency. Validation that is not relevant to the code under test, such as checksum verification or cryptographic operations, can hinder fuzzer progress as well; understanding where these issues may arise lets you patch the target and avoid getting “stuck” behind these gates.
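
As an illustration of patching out such a gate, here is a minimal sketch for a hypothetical toy format (a 4-byte little-endian checksum followed by a payload). The format, the byte-sum checksum, and the names `parse_record` and `kVerifyChecksum` are assumptions made up for this example and do not come from any real project. The check is compiled out when `FUZZING_BUILD_MODE_UNSAFE_FOR_PRODUCTION` is defined, a macro conventionally used to mark fuzzing-only builds.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical toy format: [4-byte little-endian checksum][payload bytes].
// The checksum is a plain byte sum, chosen only for illustration.

#ifdef FUZZING_BUILD_MODE_UNSAFE_FOR_PRODUCTION
constexpr bool kVerifyChecksum = false;  // fuzzing build: gate disabled
#else
constexpr bool kVerifyChecksum = true;   // normal build: gate enforced
#endif

uint32_t byte_sum(const uint8_t *p, size_t n) {
    uint32_t sum = 0;
    for (size_t i = 0; i < n; ++i) sum += p[i];
    return sum;
}

// Returns true and fills `payload_out` if the record is accepted.
bool parse_record(const uint8_t *data, size_t size,
                  std::vector<uint8_t> &payload_out) {
    if (size < 4) return false;  // too short to hold the checksum field
    const uint32_t stored = static_cast<uint32_t>(data[0]) |
                            static_cast<uint32_t>(data[1]) << 8 |
                            static_cast<uint32_t>(data[2]) << 16 |
                            static_cast<uint32_t>(data[3]) << 24;
    const uint8_t *payload = data + 4;
    const size_t payload_size = size - 4;
    if (kVerifyChecksum && stored != byte_sum(payload, payload_size)) {
        return false;  // a randomly mutated input almost always stops here
    }
    // The parsing logic that is actually worth fuzzing would start here.
    payload_out.assign(payload, payload + payload_size);
    return true;
}
```

With the gate disabled, mutated inputs reach the parsing logic directly. Any crash found this way should still be re-checked against a normal build, since a real attacker would have to supply a valid checksum.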

5. Who is Using This Target?

Understanding the downstream users of a library can help prioritize fuzzing efforts. Bugs discovered in widely used code, or in code used in scenarios where patching is difficult or infrequent, usually have a higher impact. Because library code is reused, a bug in a library can be critical, affecting a far wider range of users than a bug in an individual application. Popular applications that are placed in security-critical contexts are also of extreme interest.

6. Who has Fuzzed This Target Before?

Projects that are being fuzzed for the first time often reveal many defects very quickly. This is part of what makes fuzzing new targets so addicting! Projects being continuously fuzzed may still have had only a subset of their functionality tested; exploring new regions of these codebases usually also uncovers issues quickly. Examining coverage reports for corpora generated by previous fuzzing efforts, and looking for major gaps in tested functionality, can lead to an explosion of newly discovered defects. Tools such as bncov and other coverage-measuring tools can help pinpoint these gaps. For more information on improving fuzz testing with coverage analysis tools, check out this blog post by my colleague, Mark Griffin.

7. What Quality-Assurance Measures Are in Place for This Target?

Does the target have a test suite? What coverage does it achieve? Are static analysis tools / linters used? Are these tools run continuously? Asking these questions and looking for gaps can help to inform where bugs can be found and what types of bugs you can expect to find. Consulting the unit tests, alongside the target’s documentation, is also a good way to learn proper library usage.

FreeImage

As part of our efforts to integrate and analyze open source projects with Mayhem, we analyzed the open source image parsing library FreeImage, and found the following vulnerabilities:

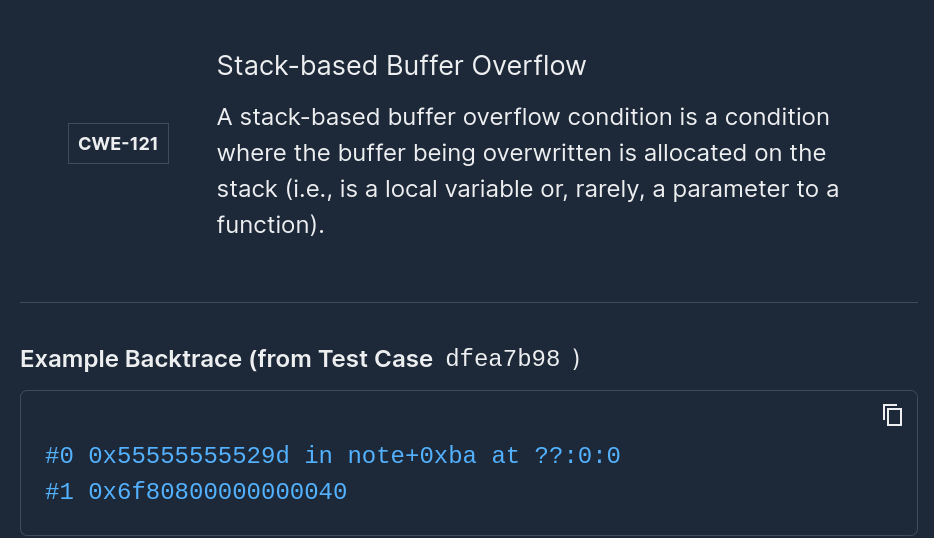

- CVE-2019-13499: A heap buffer overflow caused by a negative-size memcpy/memset in psdParser::UnpackRLE in the psdThumbnail component of FreeImage 3.18.0 allows an attacker to cause a denial of service via a crafted PSD file.

- CVE-2019-13500: A heap buffer overflow in psdThumbnail::Read in the psdThumbnail component of FreeImage 3.18.0 allows an attacker to cause a denial of service or execute arbitrary code via a crafted PSD file.

- CVE-2019-13501: A heap buffer overflow in psdParser::ReadImageLine in the psdParser component of FreeImage 3.18.0 allows an attacker to cause a denial of service or execute arbitrary code via a crafted PSD file.

The impact of these vulnerabilities depends mostly on how the host application uses the library. However, an application using an unpatched version of FreeImage that reads PSD files from untrusted sources may allow an attacker to execute arbitrary code.
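
To make this bug class concrete: PSD stores RLE-compressed image rows using the PackBits scheme, where each header byte announces either a literal run or a repeated byte. In decoders of this kind, a "negative-size" memcpy or memset can arise when a run length taken from the file is used without being checked against the space remaining in the buffers. The following is a minimal sketch of a bounds-checked PackBits decoder. It is not FreeImage's code, and the function name `unpack_rle_checked` and its reject-on-overflow policy are assumptions chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Decodes PackBits-style RLE from `src` into `dst`.
// Returns the number of bytes written, or -1 if a run would read past
// the end of `src` or write past the end of `dst`.
long unpack_rle_checked(const uint8_t *src, size_t src_len,
                        uint8_t *dst, size_t dst_len) {
    size_t in = 0, out = 0;
    while (in < src_len && out < dst_len) {
        const int8_t header = static_cast<int8_t>(src[in++]);
        if (header == -128) continue;  // no-op marker
        if (header >= 0) {
            // Literal run: copy the next (header + 1) bytes verbatim.
            const size_t count = static_cast<size_t>(header) + 1;
            if (count > src_len - in || count > dst_len - out) return -1;
            std::memcpy(dst + out, src + in, count);
            in += count;
            out += count;
        } else {
            // Repeat run: write the next byte (1 - header) times.
            const size_t count = static_cast<size_t>(1 - header);
            if (in >= src_len || count > dst_len - out) return -1;
            std::memset(dst + out, src[in++], count);
            out += count;
        }
    }
    return static_cast<long>(out);
}
```

Both comparisons are written against the remaining space (`src_len - in`, `dst_len - out`), which cannot underflow because the loop condition and the earlier checks keep `in <= src_len` and `out <= dst_len`. Omitting either check is what lets a crafted file drive the copy past the end of a heap buffer.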

What to Target?

As a case study, let’s evaluate FreeImage using the criteria discussed above:

- Can I build and run the target?
  - Yes. The project uses a standard Makefile, making it easy to get running.

- What language is the target written in?
  - A combination of C and C++.

- How does this target accept input?
  - It parses image files and can also parse image data from a memory buffer (convenient for in-process fuzzing / LibFuzzer).

- What input format does this target expect?
  - A whole bunch! Some of these formats are handled by third-party libraries whose functionality is re-exposed under a common interface. One that sticks out is PSD (Adobe Photoshop), which is handled by a custom parser developed for this project. PSD is a notoriously difficult format to parse, which indicated to me that this is a ripe candidate for fuzzing.

- Who is using this target?
  - I first heard about it as a recommendation to C++ developers in lists such as Awesome C++. It is packaged in Debian and in derivative Linux distros such as Ubuntu. Looking at its rdepends, we see several recognizable projects that list FreeImage as a dependency, including ArrayFire, CEGui, Gazebo Simulator, OpenCASCADE, FreeCAD, and OGRE.

- Who has fuzzed this target before?
  - Recently reported vulnerabilities indicate that someone else is probably fuzzing this, but no one else has publicly posted fuzz targets for this project at the time of this writing.

- What quality assurance mechanisms are in place for this target?
  - They have a test suite, but no tests for the PSD parser! The project is hosted in a CVS repository on SourceForge and was last released on July 31, 2018; development appears to have either halted or slowed considerably.

From a glimpse at the code, documentation, and project page, we have found a good candidate target to fuzz, namely the PSD parser inside of FreeImage.

Packaging the Target

Following the same general approach for fuzzing libraries with Mayhem (described in detail here), after consulting the documentation and library usage from the test suite we came up with the following LibFuzzer target:
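
A minimal sketch of what such a target can look like is shown below. It is an illustration under stated assumptions, not necessarily the exact harness used for this analysis: it assumes FreeImage's in-memory loading API (`FreeImage_OpenMemory`, `FreeImage_LoadFromMemory` with `FIF_PSD`, `FreeImage_Unload`, `FreeImage_CloseMemory`), and the helper name `decode_psd` is hypothetical. The `__has_include` guard and the stand-in branch exist only so that the sketch still compiles on a machine without FreeImage installed; a real harness would include `FreeImage.h` unconditionally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>

#if defined(__has_include)
#  if __has_include(<FreeImage.h>)
#    include <FreeImage.h>
#    define HAVE_FREEIMAGE 1
#  endif
#endif

// Decodes one in-memory input as PSD and releases everything it allocated.
static void decode_psd(const uint8_t *data, size_t size) {
#ifdef HAVE_FREEIMAGE
    // FreeImage_OpenMemory takes a non-const pointer; the buffer is only
    // read from in this harness, hence the const_cast.
    FIMEMORY *mem = FreeImage_OpenMemory(const_cast<BYTE *>(data),
                                         static_cast<DWORD>(size));
    if (mem == nullptr) return;
    FIBITMAP *bitmap = FreeImage_LoadFromMemory(FIF_PSD, mem, 0);
    if (bitmap != nullptr) FreeImage_Unload(bitmap);
    FreeImage_CloseMemory(mem);
#else
    // Stand-in used only when FreeImage is not available: look for the
    // "8BPS" PSD signature so the sketch still compiles and runs.
    const bool looks_like_psd = size >= 4 && std::memcmp(data, "8BPS", 4) == 0;
    (void)looks_like_psd;
#endif
}

// libFuzzer calls this entry point once per generated input.
extern "C" int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size) {
    decode_psd(data, size);
    return 0;  // 0 tells libFuzzer the input was processed normally
}
```

Assuming the file is saved as `harness.cpp` (a hypothetical name) and FreeImage is installed as a shared library, something like `clang++ -g -fsanitize=fuzzer,address harness.cpp -lfreeimage` would produce a fuzzing binary. When linking FreeImage statically, `FreeImage_Initialise` would also need to be called once before the first load.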

We can compile and link this against FreeImage 3.18.0, the latest version at the time of this post, and analyze the resulting package with Mayhem.

Disclosure Process

ForAllSecure attempted to reach out to the maintainers of FreeImage. Unfortunately, they did not respond to our efforts to disclose these vulnerabilities via email or to our efforts to reach them via their mailing list or SourceForge issue tracker. We are disclosing details of these vulnerabilities now in accordance with our vulnerability disclosure policy. Outlined below is our disclosure timeline:

- 6/10/19 - First attempt to contact maintainers via the email address listed on the SourceForge page

- 6/13/19 - Reached out to Debian Security Team about vulnerability in package with patch

- 7/1/19 - Second attempt to contact maintainers via email

- 7/11/19 - Post made to FreeImage developer forum on SourceForge asking for a contact to disclose vulnerabilities to

- 12/11/19 - Emailed Debian Security Team again prior to publishing this post

Patches for the vulnerabilities are available here.

Conclusions

When considering the question “what should I fuzz?”, or the related question “what should I fuzz first?”, doing some upfront analysis can help to maximize your efficiency and results. Understanding the attack surface and security posture of a potential target can help inform decisions on what to prioritize when surveying targets to fuzz. Once a target is appropriately packaged, fuzzing it is a mostly automated process, with the manual effort exerted up front, meaning that you can continue to explore new targets and code while the most promising targets are being analyzed by the fuzzer. By making good judgements and prioritizing effectively, you can find defects more quickly and parallelize the work of patching them with the work of expanding coverage (informed by results from prior runs). The effort needed to pick a promising target up front is small, and it can pay dividends over time.

Have questions? I’d be happy to answer them personally. I'll be at Shmoocon with my colleague Mark Griffin, who will have a 50-min speaking session. We’d love to meet. Find us at Mark’s session on “Knowing the UnFuzzed and Finding Bugs with Coverage Analysis”, as a part of the Build It! track, or at the ForAllSecure booth. See you there!
