New To Autonomous Security? The Components, The Reality, And What You Can Do Today

Autonomy is just another word for automating decisions, and we can make cyber more autonomous. This has been proven by in-depth scientific work in top-tier research venues, by a 2016 public demonstration from DARPA (the Defense Advanced Research Projects Agency), and by new industry tools. Together, they show that we can replace humans in cybersecurity, or at least make them more productive, by replacing manual human effort with autonomous technology. However, it is important to note that the primary focus of research is to show what “can” be done, not what “should” be done. What are the parts of a fully cyber-autonomous system? What can you add to your toolbox today to make your cybersecurity program more autonomous? Read on to learn the 4 key components of a cyber-autonomous system, what has been shown, and what you can do today.

The Challenge

In 2014, DARPA issued a challenge: can researchers demonstrate that fully autonomous cyber is possible? It dubbed this challenge the “Cyber Grand Challenge” (CGC).

DARPA is no neophyte: it funded and led the development of the original internet. Its previous grand challenges, such as the autonomous car challenge, shaped the technology we find at Tesla, Uber, and Argo AI. DARPA wanted to do the same for cybersecurity.

$60 million later, the results are in. Yes, fully autonomous cyber is possible, at least in theory and in DARPA’s defined environment. Participants demonstrated autonomous application security by showing how systems can find vulnerabilities and self-heal from them. (In later posts we will talk about network security.)

DARPA’s purpose for this challenge was not to show that an application or system is secure. DARPA considered that the wrong way to think: cybersecurity is not a binary state of being “secure” or “insecure.” Rather, security is about moving faster than your adversaries. We must autonomously find new vulnerabilities, fix them, and decide which fixes to field, all faster than the threat landscape changes.

Just as with the autonomous vehicle challenge, the DARPA CGC gave us a glimpse into the future. Each successful participant used the same 4 key components in its solution, and those components suggest the criteria organizations should consider as they add autonomous cyber technology to their toolbox.

The 4 Components of an Autonomous AppSec Program

Autonomous security systems make decisions that were previously left to humans. Decisions such as “is this code vulnerable?” and “should I field this patch?” are questions every security and IT professional must answer on a near-daily basis.

The fully autonomous systems fielded at the DARPA CGC had four main components operating in an autonomous decision loop:

- Hunt: automatically find new vulnerabilities.

- Protect: harden or rewrite applications automatically to prevent them from being exploited.

- Evaluate: measure the business impact of fielding protection measures.

- Act: field any defenses that meet the business impact criteria and help beat opponents.

The decision loop ran continuously, cycling through each component in turn.
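The loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any CGC system was actually built: the four stage functions are hypothetical hooks you would supply, and the stub values in the usage example (including the 5% threshold borrowed from the Evaluate section) are made up.

```python
from typing import Callable, Iterable, List, Optional


def autonomous_loop(
    hunt: Callable[[], Iterable[str]],
    protect: Callable[[str], Optional[str]],
    evaluate: Callable[[str], float],
    act: Callable[[str, float], bool],
    rounds: int,
) -> List[str]:
    """Run the hunt -> protect -> evaluate -> act loop and return the fielded fixes.

    All four stage functions are hypothetical hooks; a real system would keep
    looping for as long as the application is fielded, not for a fixed count.
    """
    fielded: List[str] = []
    for _ in range(rounds):
        for vulnerability in hunt():              # Hunt: find new vulnerabilities
            candidate = protect(vulnerability)    # Protect: propose a fix or hardening
            if candidate is None:
                continue                          # no protection could be generated
            measurement = evaluate(candidate)     # Evaluate: measure business impact
            if act(candidate, measurement):       # Act: field it if the policy allows
                fielded.append(candidate)
    return fielded


# Usage with stub stages (all values are made up for illustration).
overheads = {"patch-for-bug-1": 0.02, "patch-for-bug-2": 0.08}
result = autonomous_loop(
    hunt=lambda: ["bug-1", "bug-2"],
    protect=lambda vuln: f"patch-for-{vuln}",
    evaluate=lambda patch: overheads[patch],
    act=lambda patch, overhead: overhead < 0.05,
    rounds=1,
)
print(result)  # ['patch-for-bug-1']
```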

Hunt

The goal of the hunt component is to find new vulnerabilities before adversaries do. There are many AppSec technologies today. Which ones are important?

First, a general principle: you want technologies that have low or no false positives. A false positive occurs when the hunt component reports a vulnerability in code that is actually safe. False positives can be deadly to autonomous AppSec programs. When systems have high false-positive rates, they can neither decide what is a real problem nor accurately justify a fix that costs time or performance.

I group today’s existing technologies into four categories:

- Static analysis. Static analysis (SAST) is like a grammar checker, but for source code: it looks at the code and tries to flag all possible problems. Sounds great, right? The challenge is that static analysis has high false-positive rates. While it is a valuable technique, those high false-positive rates disqualify SAST as a viable option for an autonomous process and system.

- Software Composition Analysis (SCA). SCA looks for copies of known vulnerable code, for example by checking whether you are running an outdated copy of a crypto library. SCA tools are typically accurate, which makes them good candidates for the enterprise. However, I noticed that SCA did not play a large role in DARPA’s CGC. The reason: the CGC applications were all custom-written from the ground up and therefore did not use existing components.

- Automated known attack patterns. Tools like Metasploit automate the launch of known attacks. They are key tools in penetration testing and can be highly accurate. However, their shortcoming is that they only check for known vulnerabilities.

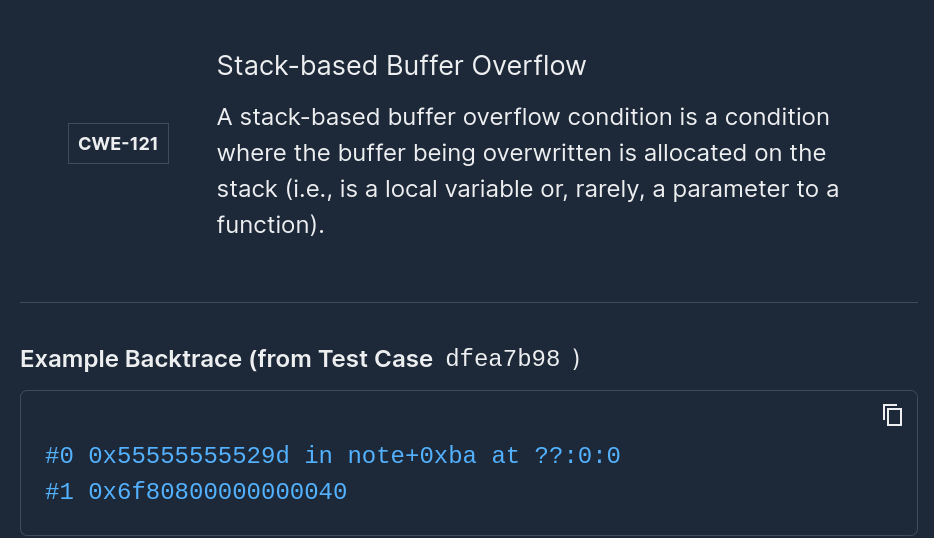

- Behavior analysis. Behavior analysis includes techniques such as dynamic analysis, fuzzing, and symbolic execution. Like SAST, these technologies can find new, previously unknown vulnerabilities. Interestingly, behavior analysis was a key component of every autonomous system in the CGC. Unlike SAST, it does not try to find every possible vulnerability in a single pass. Because behavior testing generates a test case proving that the vulnerability can be triggered, every vulnerability it reports is actionable.
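The “actionable” property can be made concrete: an autonomous system can refuse to act on any finding it cannot reproduce. The sketch below assumes a toy program under test (`parse_record`) and a hypothetical `Finding` record, both invented for illustration; it reruns each reported input and keeps only the findings that actually trigger a fault.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Finding:
    description: str
    reproducer: Optional[bytes]  # input that triggers the bug, if the tool produced one


def parse_record(data: bytes) -> int:
    """Toy program under test (hypothetical): crashes on records tagged 0xFF."""
    if data[:1] == b"\xff":
        raise IndexError("simulated out-of-bounds read")
    return len(data)


def triggers_fault(program: Callable[[bytes], object], data: bytes) -> bool:
    """Return True if running the program on this input raises an exception."""
    try:
        program(data)
    except Exception:
        return True
    return False


def actionable(findings: List[Finding], program: Callable[[bytes], object]) -> List[Finding]:
    """Keep only findings whose reproducer demonstrably triggers a fault."""
    return [
        f for f in findings
        if f.reproducer is not None and triggers_fault(program, f.reproducer)
    ]


reports = [
    Finding("crash on 0xFF tag", b"\xff\x00\x01"),  # reproducible: kept
    Finding("suspected overflow", b"\x01\x02"),     # does not reproduce: dropped
    Finding("static warning, no proof", None),      # no test case: dropped
]
print([f.description for f in actionable(reports, parse_record)])
# ['crash on 0xFF tag']
```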

Certainly, adversaries will try the known attacks first. Thus, my recommendation is to use SCA if you don’t already. I recommend the same for testing known attack patterns.
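At its core, SCA compares an inventory of the components you ship against a feed of known-vulnerable versions. Here is a minimal sketch, assuming a hypothetical advisory feed and made-up package names; real SCA tools read lockfiles or binary metadata and handle far richer version semantics than the plain dotted-integer comparison used here.

```python
from typing import Dict, List, Tuple

Version = Tuple[int, ...]

# Hypothetical advisory feed: package -> list of (advisory id, first fixed version).
ADVISORIES: Dict[str, List[Tuple[str, str]]] = {
    "examplecrypto": [("EXAMPLE-ADV-0001", "1.1.1")],
    "exampleparser": [("EXAMPLE-ADV-0002", "2.4.0")],
}


def parse_version(text: str) -> Version:
    """Turn '1.0.2' into (1, 0, 2). Assumes purely numeric, dot-separated versions."""
    return tuple(int(part) for part in text.split("."))


def find_vulnerable(
    inventory: Dict[str, str],
    advisories: Dict[str, List[Tuple[str, str]]],
) -> List[Tuple[str, str, str]]:
    """Return (package, installed version, advisory id) for every outdated component."""
    findings = []
    for name, installed in inventory.items():
        for advisory_id, first_fixed in advisories.get(name, []):
            if parse_version(installed) < parse_version(first_fixed):
                findings.append((name, installed, advisory_id))
    return findings


inventory = {"examplecrypto": "1.0.2", "exampleparser": "2.4.1", "examplelog": "0.9"}
print(find_vulnerable(inventory, ADVISORIES))
# [('examplecrypto', '1.0.2', 'EXAMPLE-ADV-0001')]
```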

However, if you want to find new vulnerabilities before adversaries do, you need to add behavior testing. In a nutshell, behavior testing attempts to guess new inputs that trigger new code paths, the paths where a latent, undiscovered vulnerability may lie. The output of behavior testing is a test suite: it gives users the inputs that trigger vulnerabilities, and it doubles as a regression test suite.
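To make “guess new inputs that trigger new code paths” concrete, here is a toy coverage-guided mutational fuzzer in pure Python. It is a teaching sketch, not how production fuzzers or the CGC systems work: it uses `sys.settrace` to record which lines of a hypothetical `target` function run, treats each distinct set of executed lines as a new path, and keeps any input that reaches one. The saved corpus plays the role of the regression suite, and the crashing inputs are the reproducers.

```python
import random
import sys
from typing import Callable, FrozenSet, List, Optional, Tuple


def target(data: bytes) -> None:
    """Hypothetical program under test: fails only on inputs starting with b'FUZ'."""
    if len(data) >= 3:
        if data[0] == ord("F"):
            if data[1] == ord("U"):
                if data[2] == ord("Z"):
                    raise ValueError("simulated memory-safety bug")


def run_with_coverage(
    func: Callable[[bytes], None], data: bytes
) -> Tuple[FrozenSet[int], Optional[BaseException]]:
    """Run func(data); return the line numbers it executed and any exception raised."""
    lines = set()
    code = func.__code__

    def local_tracer(frame, event, arg):
        if event == "line":
            lines.add(frame.f_lineno)
        return local_tracer

    def global_tracer(frame, event, arg):
        # Trace only the function under test, not the fuzzer itself.
        return local_tracer if frame.f_code is code else None

    error: Optional[BaseException] = None
    sys.settrace(global_tracer)
    try:
        func(data)
    except Exception as exc:  # any exception counts as a crash in this toy model
        error = exc
    finally:
        sys.settrace(None)
    return frozenset(lines), error


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Overwrite one random byte with a random value."""
    buf = bytearray(data)
    buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)


def fuzz(
    func: Callable[[bytes], None],
    seeds: List[bytes],
    iterations: int = 200_000,
    rng_seed: int = 0,
) -> Tuple[List[bytes], List[bytes]]:
    """Return (corpus of path-expanding inputs, crashing inputs)."""
    rng = random.Random(rng_seed)
    corpus = list(seeds)
    seen_paths = {run_with_coverage(func, s)[0] for s in corpus}
    crashes: List[bytes] = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        path, error = run_with_coverage(func, candidate)
        if error is not None:
            crashes.append(candidate)
            break  # stop at the first crash to keep the example short
        if path not in seen_paths:  # new code path: keep the input for more mutation
            seen_paths.add(path)
            corpus.append(candidate)
    return corpus, crashes


corpus, crashes = fuzz(target, [b"AAAA"])
print(crashes)  # a single input whose first three bytes are b'FUZ'
```

Random mutation alone would need to guess all three bytes at once; keeping the partial matches (`F...`, then `FU..`) in the corpus is what lets coverage guidance reach the bug in a few thousand tries instead.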

Behavior testing works, and new products are entering the market that let organizations automatically perform behavior analysis on their products. For example, Google’s ClusterFuzz, the fuzzing infrastructure that also powers the OSS-Fuzz project, has found more than 16,000 actionable bugs in Chrome, autonomously and with zero false positives. Microsoft has also used fuzzing on its Office suite to weed out bugs that its static analysis tools had missed.

Protect

Autonomous protection means changing an application to better defend against identified vulnerabilities or classes of vulnerabilities. There are two classes of techniques for autonomous AppSec protection:

- Hardening the runtime of an application so it resists attackers, regardless of the vulnerabilities within it. Examples include industry Runtime Application Self-Protection (RASP) products.

- Patching vulnerabilities by intelligently and automatically rewriting the program. Automatically patching compiled executable programs (think compiled C/C++) without access to their source code was a major innovation in the CGC. There are no industry products that do this today.
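Commercial RASP products instrument the language runtime and are far more sophisticated, but the basic idea of hardening without touching the vulnerable code can be sketched as a “virtual patch”: a wrapper that enforces a safety precondition before the vulnerable function runs. Everything here is hypothetical and illustrative, including the toy `parse_header` function, its length-field bug, and the `virtual_patch` helper.

```python
from functools import wraps
from typing import Callable, Optional


def parse_header(data: bytes) -> int:
    """Toy vulnerable function (hypothetical): trusts a length byte from the input."""
    length = data[0]
    payload = data[1:1 + length]
    if len(payload) != length:
        # Stand-in for reading past the end of a buffer in C/C++.
        raise IndexError("read past end of buffer")
    return length


def virtual_patch(precondition: Callable[[bytes], bool]):
    """Wrap a function so inputs failing the precondition are rejected, not processed."""
    def decorator(func: Callable[[bytes], int]) -> Callable[[bytes], Optional[int]]:
        @wraps(func)
        def guarded(data: bytes) -> Optional[int]:
            if not precondition(data):
                return None  # reject the malicious or malformed input safely
            return func(data)
        return guarded
    return decorator


# Precondition: the declared length must fit inside the bytes actually received.
hardened = virtual_patch(lambda d: len(d) >= 1 and d[0] <= len(d) - 1)(parse_header)

print(hardened(b"\x03abc"))  # 3: well-formed input behaves as before
print(hardened(b"\x10ab"))   # None: the attack input is rejected instead of crashing
```

The wrapper fails closed: anything that violates the precondition is dropped, which trades a small amount of functionality on malformed input for safety on the vulnerable path.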

Although auto-hardening and protection technology is new, I believe it is worth evaluating. RASP technology promises to harden applications in a production network. Sadly, auto-patching is still very theoretical and not ready for prime time (yet).

Evaluate

The unsung heroes of security are the people who determine whether a patch can be fielded without hurting the business. A 2019 survey showed that 52% of DevOps participants found updating dependencies (like those flagged by SCA) “painful.” I believe automatic evaluation can alleviate this pain.

Evaluations should measure the risk of fielding a fix, such as a dependency update, by answering the following:

- Did the fix prevent the security bug from being exploited?

- What was the performance overhead? In the CGC, winners used a 5% cutoff: if a protection added more than 5% overhead, they did not field it autonomously.

- Was there any functionality lost with the defense?
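These three questions map naturally onto an automated check that replays the crashing inputs and the regression suite produced by behavior testing. Below is a minimal sketch, assuming the program can be called as a Python function on a byte string; the toy `original` and `patched` functions and the `evaluate_fix` helper are hypothetical. Timing-based overhead is noisy, so a real evaluation would repeat measurements on representative workloads.

```python
import time
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvaluationReport:
    exploit_blocked: bool          # patched program no longer faults on crash inputs
    overhead: float                # fractional slowdown, e.g. 0.03 means 3%
    functionality_preserved: bool  # patched output matches original on regression inputs


def _crashes(program: Callable[[bytes], int], data: bytes) -> bool:
    try:
        program(data)
    except Exception:
        return True
    return False


def _total_time(program: Callable[[bytes], int], inputs: List[bytes], repeats: int) -> float:
    start = time.perf_counter()
    for _ in range(repeats):
        for data in inputs:
            program(data)
    return time.perf_counter() - start


def evaluate_fix(original, patched, crash_inputs, regression_inputs, repeats=200):
    """Answer the three evaluation questions for one candidate fix."""
    exploit_blocked = not any(_crashes(patched, d) for d in crash_inputs)
    functionality_preserved = all(original(d) == patched(d) for d in regression_inputs)
    base = _total_time(original, regression_inputs, repeats)
    new = _total_time(patched, regression_inputs, repeats)
    overhead = (new - base) / base if base > 0 else 0.0
    return EvaluationReport(exploit_blocked, overhead, functionality_preserved)


def original(data: bytes) -> int:
    if data[:3] == b"FUZ":
        raise ValueError("simulated memory-safety bug")
    return len(data)


def patched(data: bytes) -> int:
    if data[:3] == b"FUZ":
        return -1  # hypothetical fix: reject the malicious input
    return len(data)


report = evaluate_fix(original, patched, crash_inputs=[b"FUZZ"],
                      regression_inputs=[b"", b"abc", b"hello"])
print(report.exploit_blocked, report.functionality_preserved)  # True True
```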

Though there is currently no solution for automatic patch evaluation, users can leverage the test suites generated by behavior testing tools to evaluate the criteria above.

Act

The goal of autonomous security is to win, not to prove security. Proving security is an academic exercise. Winning is about acting faster than your adversary. After all, real-life security is a multi-party game, where you and your adversary are both taking and responding to actions.

The most intimidating step is creating an infrastructure for automatic action. Naturally, people want a human in the loop. I believe, though, that humans can be the slowest link. With sound practices for automatic evaluation, organizations can use measured data to make high-quality decisions. As a starting point, I recommend adopting standards that allow your team to field a protection measure autonomously. For example, a fix that has less than 5% overhead and does not impact functionality is reasonable to field autonomously in many enterprise environments.
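A policy like the example above (less than 5% overhead, no functionality impact) is small enough to write down as code that your team can review, version, and audit. Here is a minimal sketch; the `EvaluationReport` fields, the `decide` function, and its three outcomes (`field`, `escalate` to a human, `reject`) are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvaluationReport:
    exploit_blocked: bool          # the fix stops the known exploit input
    overhead: float                # fractional slowdown, e.g. 0.03 means 3%
    functionality_preserved: bool  # the regression suite still passes


def decide(report: EvaluationReport, max_overhead: float = 0.05) -> str:
    """Return 'field', 'escalate' (send to a human), or 'reject'."""
    if not report.exploit_blocked:
        return "reject"    # the fix does not do its job
    if report.functionality_preserved and report.overhead < max_overhead:
        return "field"     # meets the policy: deploy without waiting for a human
    return "escalate"      # works, but the trade-off needs a human decision


print(decide(EvaluationReport(True, 0.02, True)))   # field
print(decide(EvaluationReport(True, 0.08, True)))   # escalate
print(decide(EvaluationReport(False, 0.00, True)))  # reject
```

Reserving human review for the escalations, rather than for every fix, keeps people out of the routine path while still giving them the hard trade-offs.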

The right mindset is critical when adopting autonomous AppSec.

- Continuously hunt for new vulnerabilities while the application is fielded. Don’t wait until you prove the app is secure before fielding.

- Use AppSec techniques that have zero false positives. Don’t be afraid of solutions that may have false negatives. Newer behavior analysis techniques, like fuzzing, are excellent fits.

- Investigate tools for automatically hardening or protecting your applications, such as RASP.

- Use data to make decisions, not subjective human judgement. You do not need new machine learning or AI tools. You just need to automate the policies and procedures that are right for your organization.

- Cybersecurity is about moving through the hunt, protect, evaluate, act loop faster than your adversary. It is not a single bit that reads “we’re secure” or “we’re vulnerable.”

Originally published at CSOOnline

