3 Reasons Developers Should Learn to Test Like a Hacker

Whether teams are running vulnerability scans or kicking off dynamic testing in the CI/CD pipeline, the new normal is to find and fix errors before applications ever reach production. The result is one unified DevSecOps pipeline that lets developers move fast, deploy quickly, and deliver secure applications.

At least, that’s the promise. And while there’s no doubt we’re closer to that world than we were ten years ago, the reality is that security still hasn’t integrated smoothly into the developer’s world. With the rise of a “shift left” approach to security, more and more of the burden of delivering secure applications has fallen on developers. When application security shifted left, it brought with it the legacy approach of looking for risks, relying on pattern recognition, and enumerating potential flaws.

This approach has left developers underwater, spending up to a third of their time building tests, reviewing results, and triaging false positives, which forces them into a tug of war between speed and security.

Solving this problem, and unlocking both velocity and security, requires moving from testing like an auditor to testing like a hacker or adversary. By bringing hackers’ techniques into application security tests early on, developers can quickly identify and remediate security issues and deliver more secure applications without the overhead of traditional AppSec approaches.

What does “testing like a hacker” mean?

In short, hackers don’t care about risks. They’re focused on exploiting a defect, not identifying a vulnerability or weakness. So when we say “test like a hacker”, we mean testing with the goal of generating exploitable defects, then using those to inform remediation efforts by developers.

Adversarial testing was once solely the realm of penetration testing teams. Techniques like fuzz testing and symbolic execution (and, dare we say it, artificial intelligence) have made it fast and accessible, which means it can be integrated easily into developer pipelines.
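To make this concrete, here is a minimal sketch of mutation-based fuzz testing in Python. The parser `parse_record`, its planted bug, and the helpers `mutate` and `fuzz` are all hypothetical illustrations, not any particular tool’s API, and real fuzzers (coverage-guided ones, for example) are far more sophisticated. The point is the shape of the result: a concrete input that crashes the code, rather than a list of weaknesses the code might have.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical parser: byte 0 declares a length, the rest is the payload."""
    if len(data) < 2:
        raise ValueError("record too short")  # expected, cleanly handled rejection
    length = data[0]
    payload = data[1:]
    # Planted bug (hypothetical): the declared length is trusted without being
    # checked against len(payload), so a short payload raises IndexError.
    if length > 0:
        _last_byte = payload[length - 1]
    return payload[:length]


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Apply a few random byte-level edits (replace, insert, delete) to the seed."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, 4)):
        op = rng.choice(("replace", "insert", "delete"))
        if op == "replace" and data:
            data[rng.randrange(len(data))] = rng.randrange(256)
        elif op == "insert":
            data.insert(rng.randrange(len(data) + 1), rng.randrange(256))
        elif op == "delete" and data:
            del data[rng.randrange(len(data))]
    return bytes(data)


def fuzz(target, seed: bytes, iterations: int = 10_000, rng_seed: int = 0):
    """Feed mutated inputs to target; return (input, exception) for the first crash."""
    rng = random.Random(rng_seed)  # fixed seed so a run can be reproduced
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            target(candidate)
        except ValueError:
            continue  # the target rejected the input as designed: not a defect
        except Exception as exc:  # anything else is treated as a crash
            return candidate, exc
    return None


if __name__ == "__main__":
    result = fuzz(parse_record, b"\x03abc")
    if result is not None:
        crashing_input, exc = result
        print(f"crash: {crashing_input!r} -> {type(exc).__name__}")
```

Because the fuzzer hands back the exact input that triggered the failure, a developer can replay it, confirm the defect is real, and keep it as a regression test once the length check is fixed.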

Here are three reasons developers should "test like a hacker":

1. Stop wasting developer time on false positives.

There’s no bigger frustration in all of security than the false positive. With some estimates putting false positives at over 75% of alerts, it’s no wonder that security is largely an exercise in separating the signal from the noise.

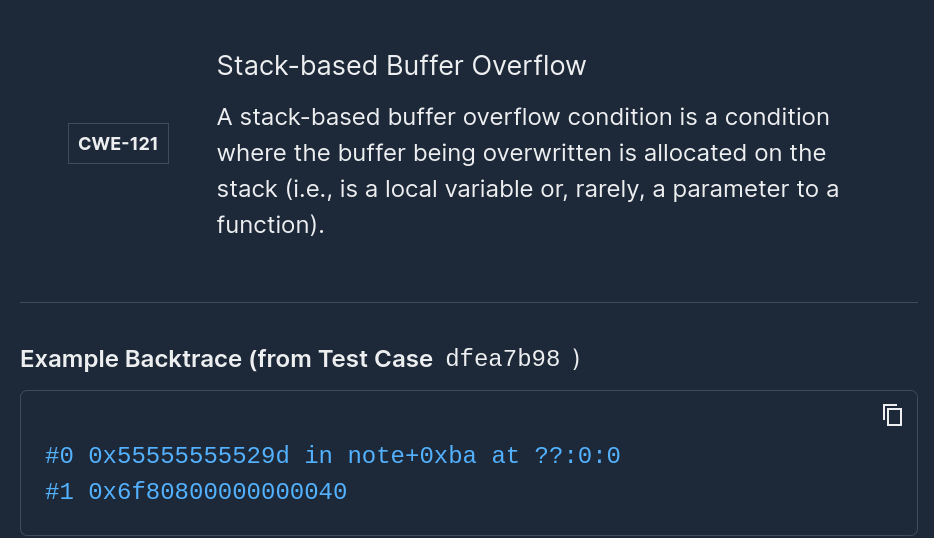

Source code analysis and application testing tools that return lists of CVEs and CWEs are no exception. Development and release velocity can’t increase when developers spend their time figuring out which vulnerability feeds to trust and separating real defects from erroneous results.

When application security testing uses hacking techniques, this problem simply doesn’t exist. The first goal of a test is to produce an exploitable defect: something provable. The second goal is to map that exploit to CWE or CVE information to provide additional context. By focusing on the exploit rather than the risk, you ensure that each result is actionable and eliminate the time spent on false positives.

2. Actually measure your application security.

The challenge with audit-based security tests is that they ultimately return a list of possibilities: you might be vulnerable to this CVE, or this CWE might surface when your application runs. This method offers no way to measure the impact that patches and remediations have on overall security, because patching a vulnerability that could never be exploited does nothing to bolster security.

Alternatively, if you adopt an adversarial testing approach and try to hack into your own application, every identified defect represents a real security gap, and every fix delivers a measurable improvement: one fewer possible exploit against your code. This lets development and security teams alike easily show the impact of fixes and demonstrate the value of robust application security testing.

3. Get ahead of the next zero-day.

Let’s be clear: fully preventing zero-days isn’t a realistic (or reachable) outcome. But zero-days are found by hackers who aren’t worried about what’s known. They care only about how to find an unknown defect and weaponize it into an exploit. So when our application security tools rely on pattern recognition and vulnerability feeds, which cover only known issues, we’re always going to be a step behind. Testing like a hacker means hunting for unknown defects the same way attackers do, which gives you a chance to find and fix them first.

By testing like a hacker, you can deliver software faster, spend less time on testing, and be more confident in your security. Together, these benefits represent a significant reduction in developer burden and a significant improvement in security outcomes. It’s the promise of DevSecOps, at long last.


Add Mayhem to Your DevSecOps for Free.

Get a full-featured 30-day free trial.
